Autonomous Driving Via Imitation Learning and Optimization

Contents:

Introduction

Autonomous driving, as a convenience-enhancing technology, has attracted a great amount of research efforts in both academia and industry for its potential benefits on safety, accessibility, and efficiency.

Typically, autonomous driving systems are partitioned into hierarhical structures including perception, decision-making, motion planning and vehicle control. Among them, planning and control are two core yet challenging problems that are responsible for safety and efficiency. They should

- comprehensively consider all possible constraints regarding safety and feasibility (kinematically and dynamically) based on the perceived environment in-formation and reasonable prediction of other roadparticipants’ behaviors;

- generate optimal/near-optimal maneuvers that providegood driving qualities such as smoothness, passengers’ comfort and time-efficiency;

- solve the problem within limited runtime to timely respond rapid changes in surrounding environment.

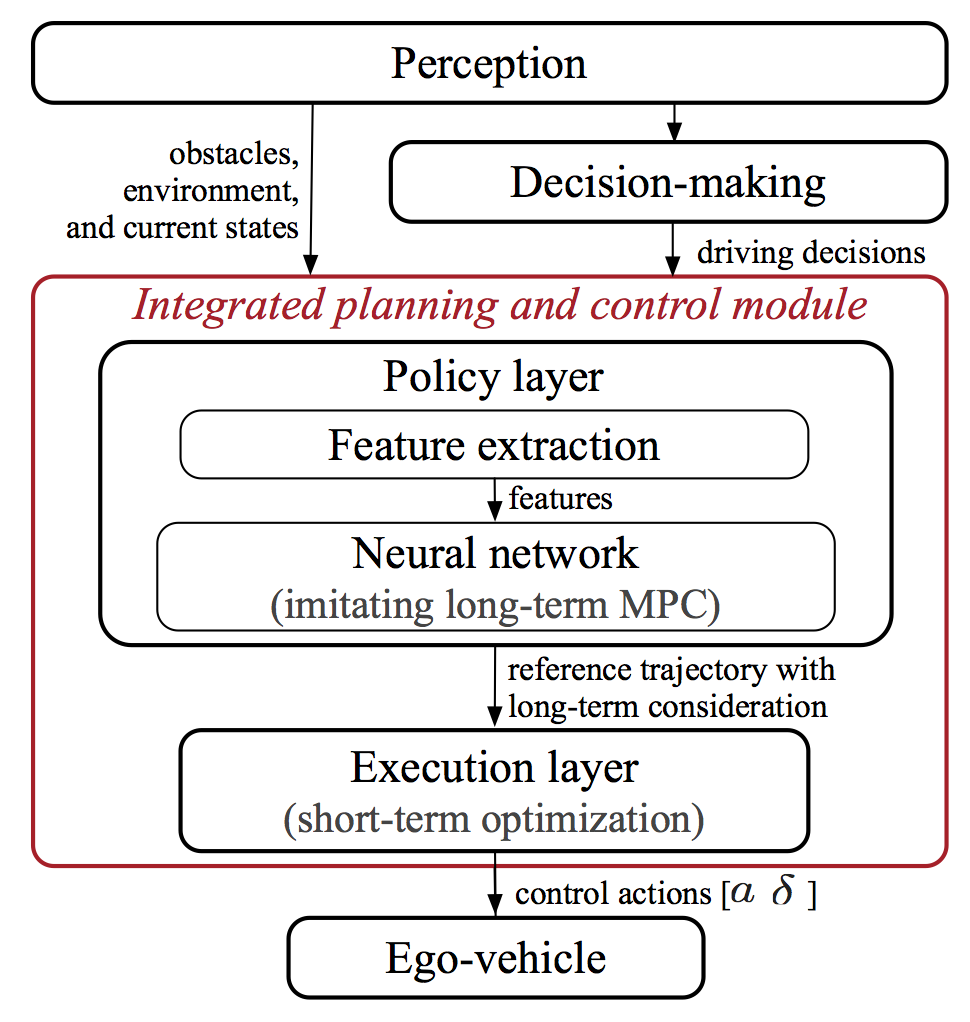

To simultaneously address the above challenges, we propose a two-layer hierarchical structure that combines imitation learning (Policy Layer) and optimization-based (Execuation Layer) methods, as shown below.

The Policy Layer in the hierarchical structure is represented by a neural network that is trained via imitation learning, and the Execuation Layer is a short-horizon optimization-based reference tracking controller. In the training phase, the expert data can come from either offline long-term optimization (for example, long-term Model Predictive Control, a.k.a, MPC) or real human driving data. In the test phase, the output of the Policy Layer is given to the Execuation Layer as an initial reference trajectory that the Execuation Layer will try to follow but with final check for collisions. Via such combination, we can generate a long-term trajectory with low collision probability and execute safely a short-term action fastly.

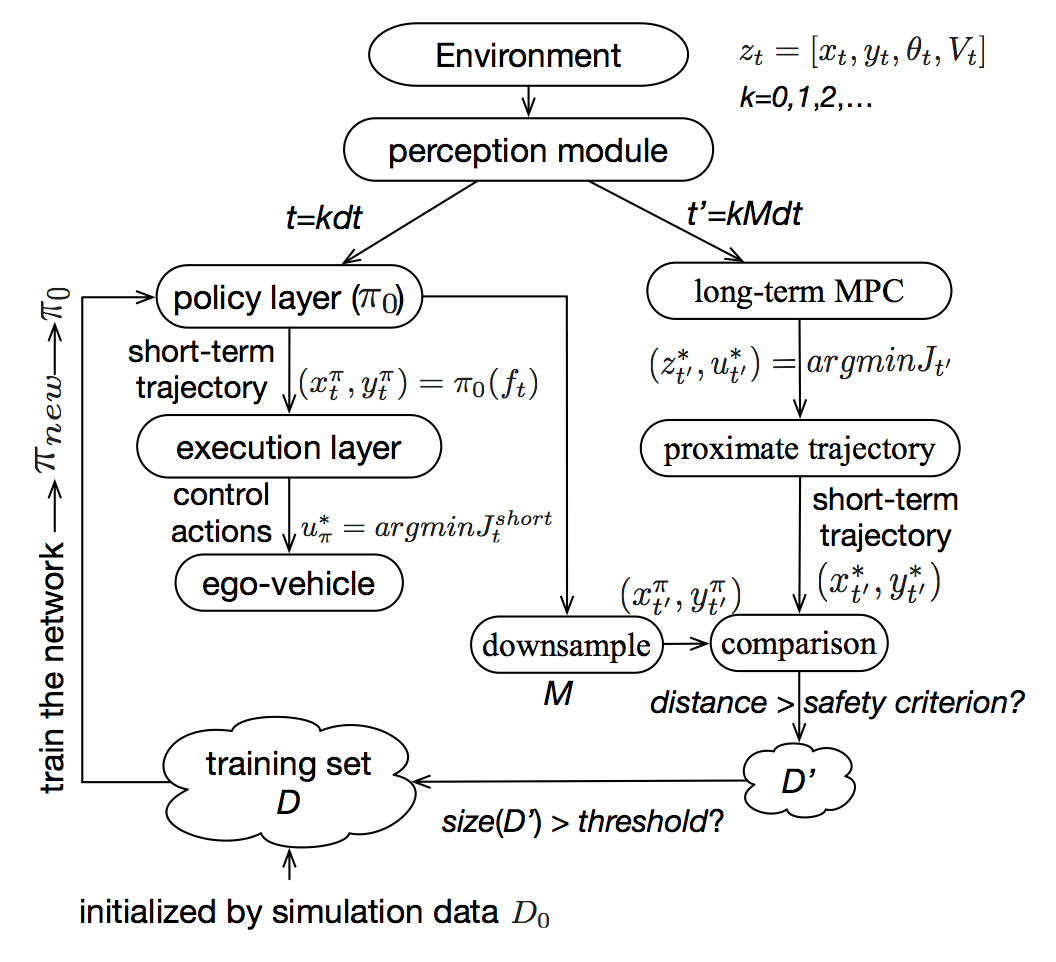

Moreover, to continuously improve the learning performance of the neural network in Policy Layer such that the long-term trajectory can be collision-free with higher and higher probability, we propose to use a _Sampled DAgger_ process, as shown below.

Demo Videos

Researchers

| Liting Sun | Graduate Student | Email Link | |

| Cheng Peng | Graduate Student | Email Link | |

| Wei Zhan | Graduate Student | Email Link |

Publications

L. Sun, C. Peng, W. Zhan, and M. Tomizuka, “A Fast Integrated Planning and Control Framework for Autonomous Driving”, in proceedings of the 2018 Dynamic Systems and Control conference (DSCC2018), Sept 30 - Oct 03, Atlanta, 2018.