Autonomous Driving Group

Contents:

- Introduction

- Facilities and Datasets

- Activities

- Research Topics

- Joining Our Group

- Members and Contact

Introduction

Our research covers full-stack autonomous driving, including onboard modules such as perception, prediction, planning and control, as well as key offline components such as simulation and testing and the automatic construction of HD maps and data. These efforts are supported and intensively verified by our facilities, including autonomous vehicles, data-collection vehicles, and driving simulators. We have also constructed our own datasets, such as the INTERACTION dataset for behavior/prediction and the UrbanLoco dataset for localization/mapping. Our recent progress has been published in flagship conferences in robotics (ICRA, IROS), computer vision (CVPR, ECCV), machine learning and AI (NeurIPS, AAAI), intelligent transportation (ITSC, IV) and control (ACC, IFAC), as well as in the corresponding top journals. We have received several Best (Student) Paper Awards/Finalists from IROS, ITSC and IV.

- Perception: 3D/2D detection, segmentation, LiDAR-camera fusion, evaluation, tracking, inference.

- Mapping and localization: automatic construction of HD maps, point cloud map construction and localization.

- Behavioral data, simulation and test: behavior dataset construction, closed-loop test, scenario/behavior generation, human-in-loop test.

- Prediction and behavior generation: interactive prediction, representation, generalization, behavior understanding and generation.

- Decision and behavior planning: interactive decision-making and planning under uncertainty, incorporating learning and model-based methods.

- Motion planning and control: safe and efficient trajectory planning, autonomous racing, policy transfer, vehicle dynamics and control.

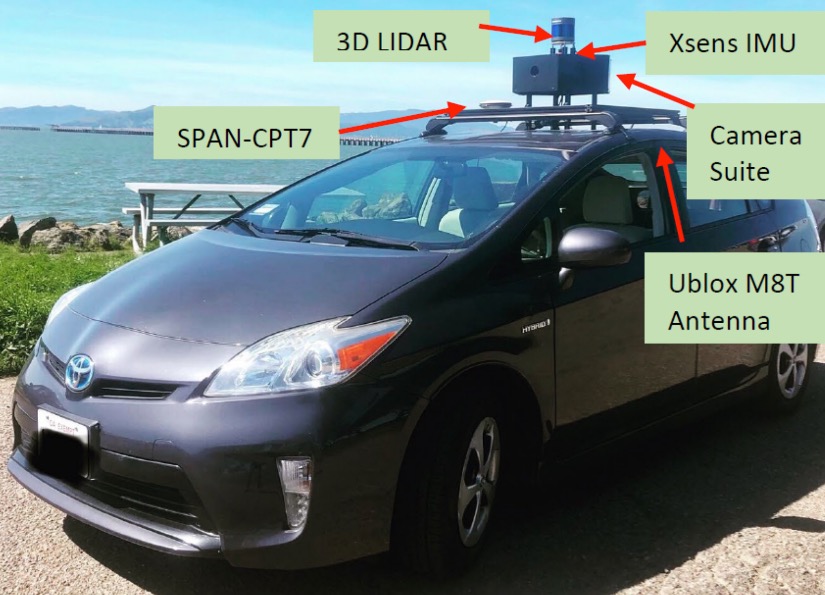

- Data-collection vehicle: equipped with a high-end navigation system and a LiDAR calibrated and synchronized with 6 cameras.

- Data collection and tests in simulation: two sets of driving simulator interfaces built from parts of real vehicles.

- Autonomous vehicles: our algorithms were tested on autonomous vehicles in test fields supported by our sponsors.

Research Topics

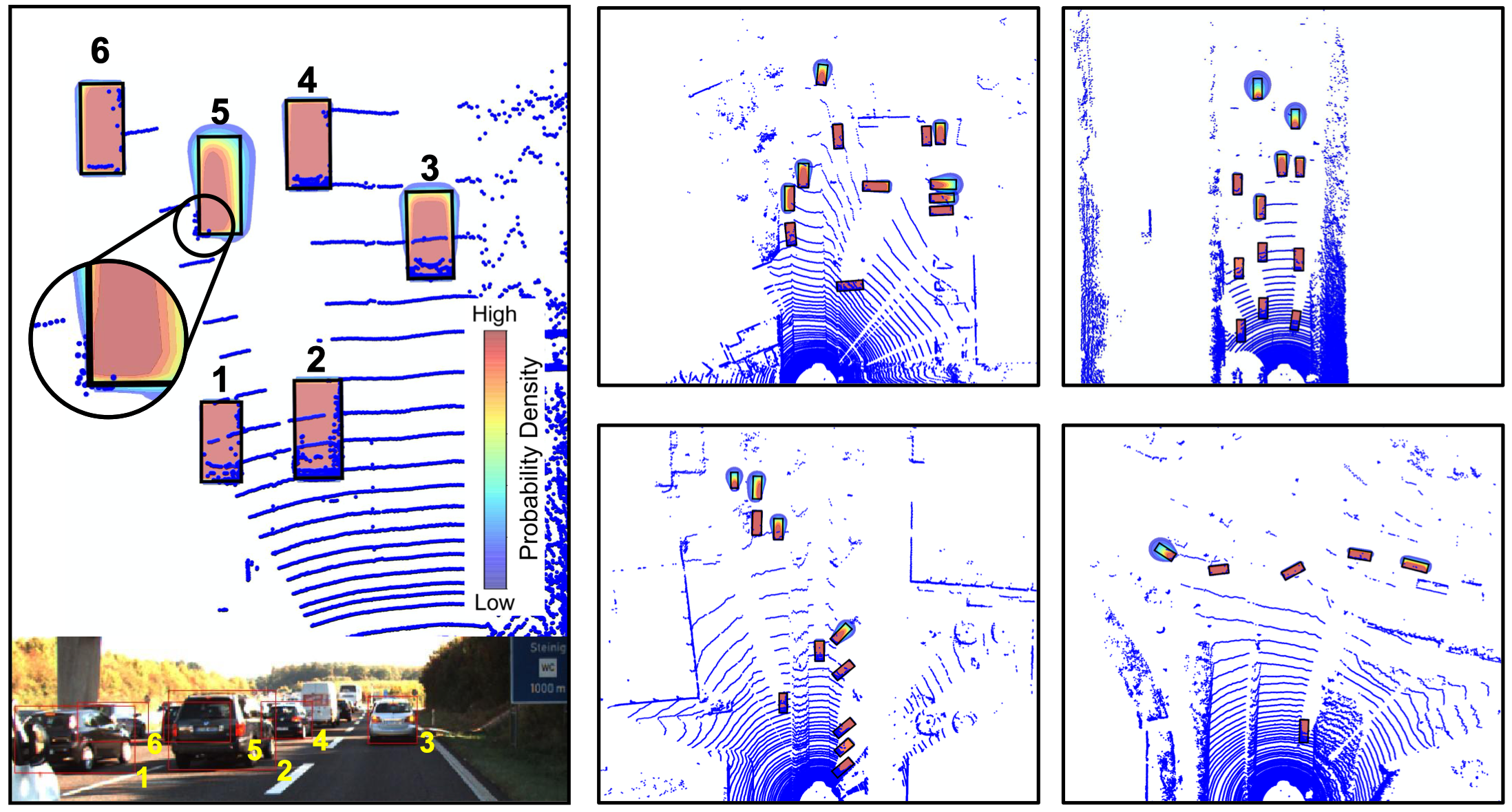

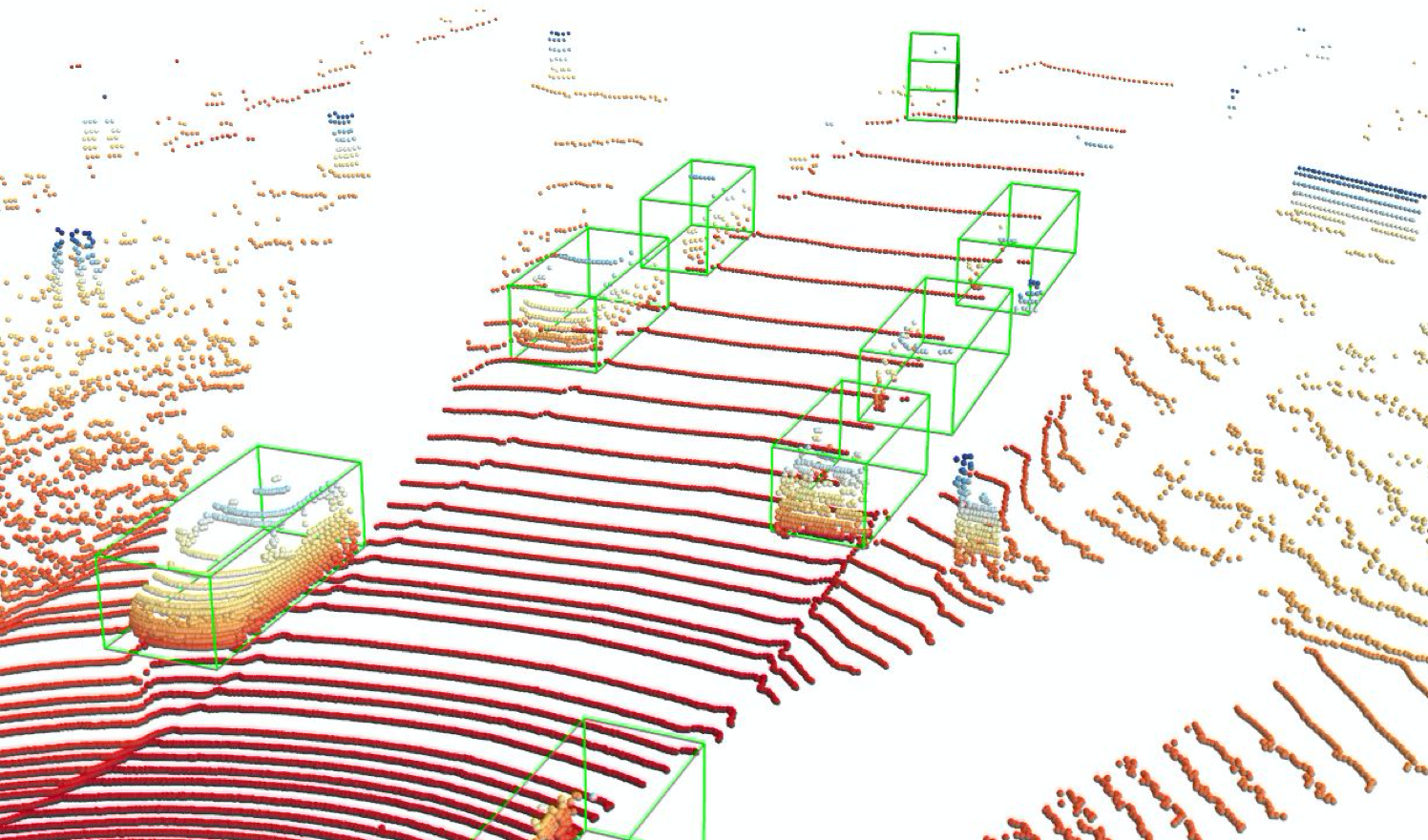

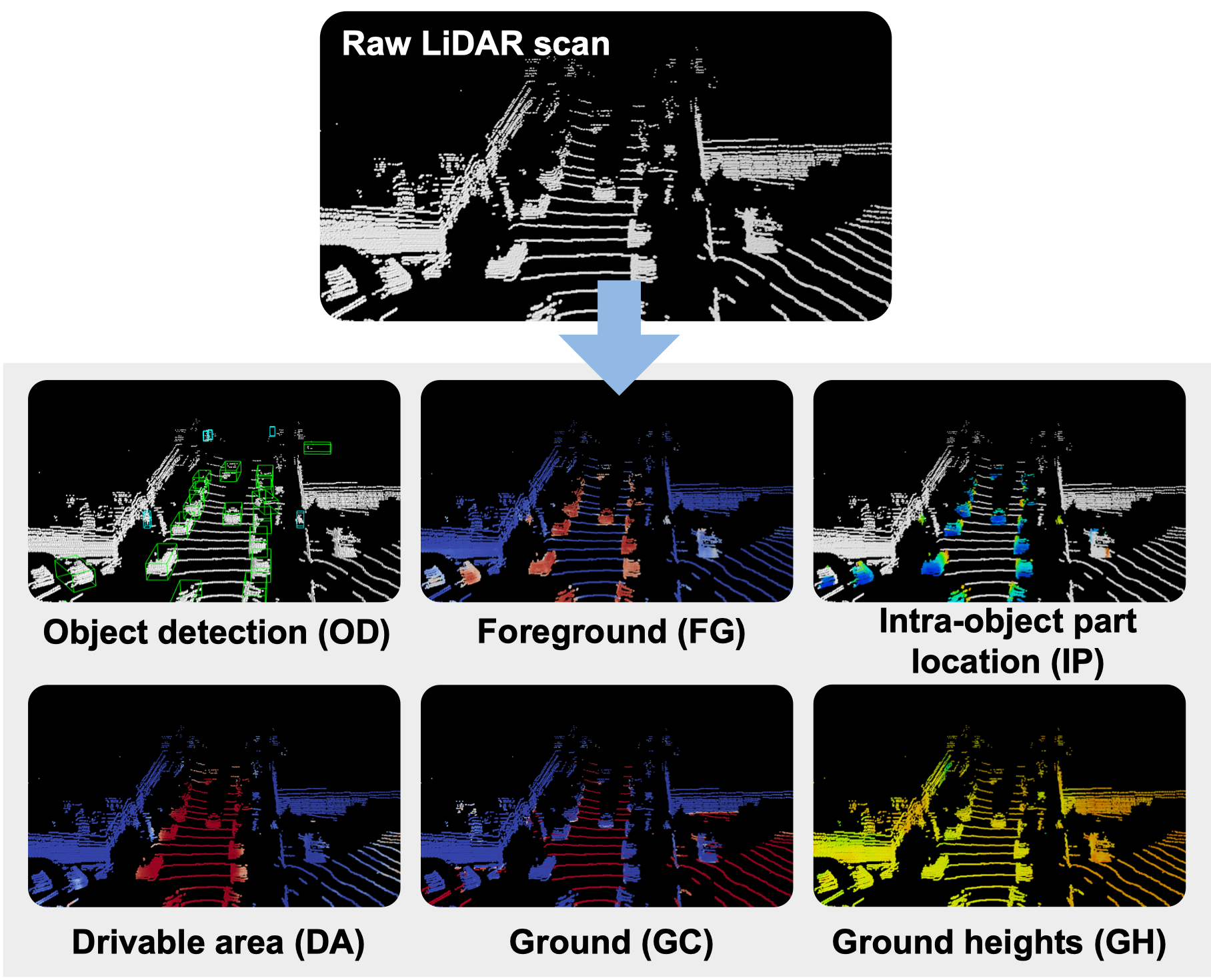

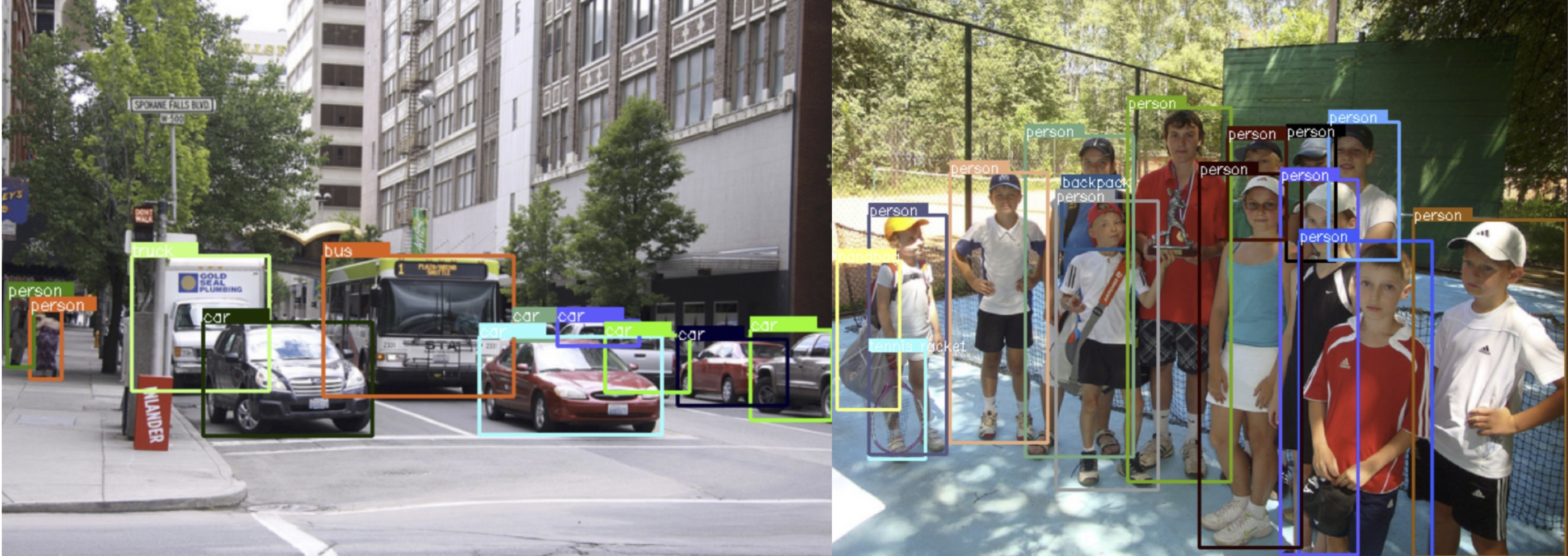

1. Perception

Perception is a prerequisite for autonomy. Our major focus is 3D perception based on LiDAR (and camera). We are interested in incorporating temporal information into 3D perception and in its synergies with downstream modules such as prediction. We address computationally efficient and data-efficient 3D perception with novel structures, self-supervision and multi-task learning, and we propose early-stage and cascade LiDAR-camera fusion approaches for 3D detection. We also dive into fundamental problems in 3D detection by analyzing and inferring uncertainties in the data annotations, as well as by evaluating and improving probabilistic 3D detection. In addition to 3D perception, we propose new structures for 2D detection, segmentation and crowd counting. We also emphasize tracking and inference of occluded objects via the behavior of the agents surrounding them.

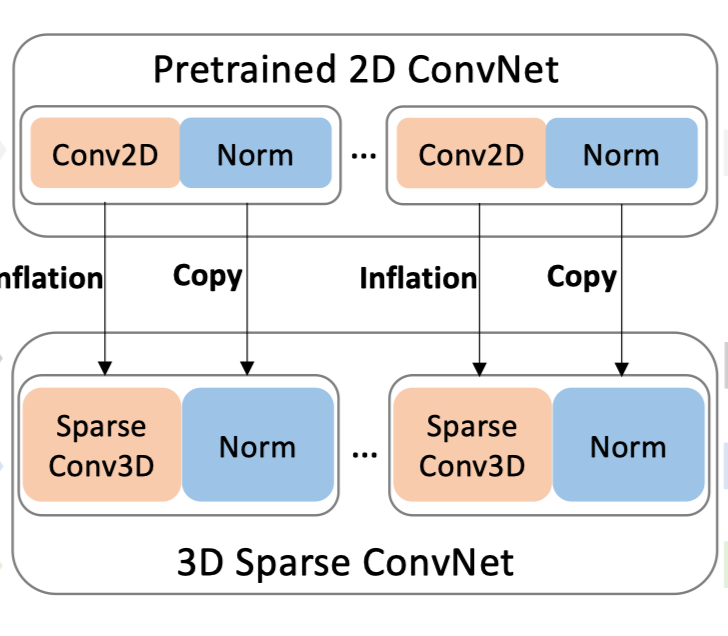

1.1 3D perception

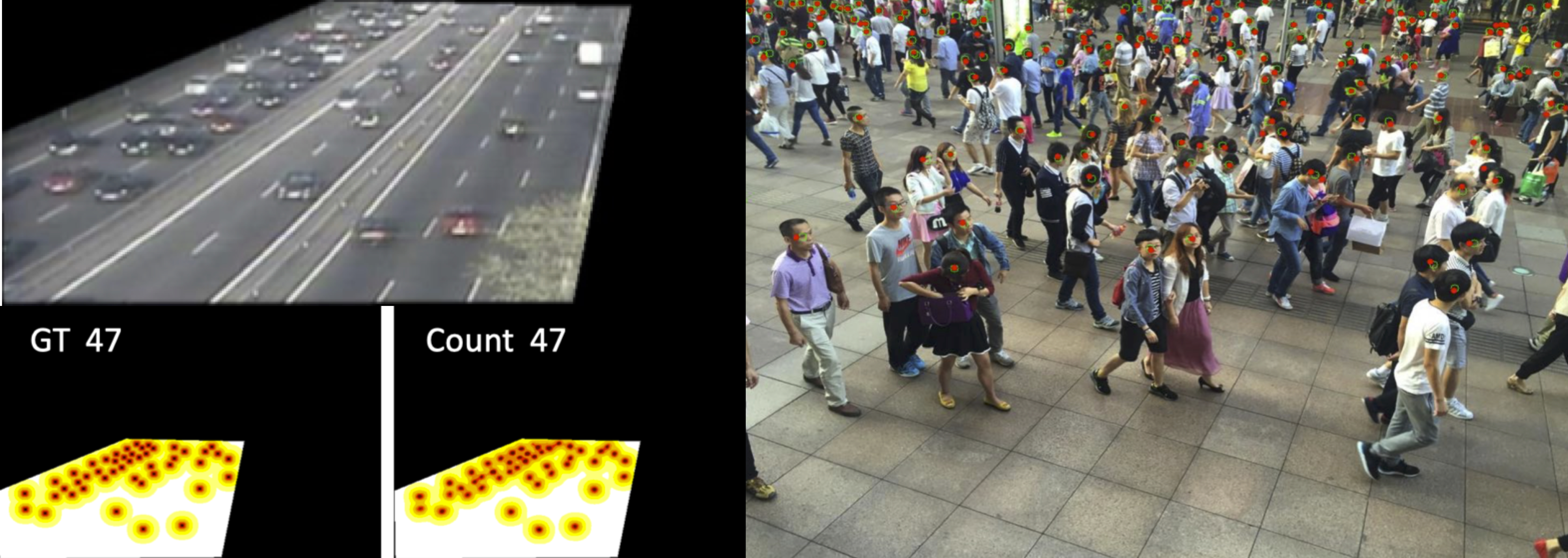

1.2 2D perception

- AutoScale (arxiv): crowd counting and localization.

1.3 Occluded object tracking and inference

- Occluded object tracking by inferring from motions of its surrounding objects: Trans-ITS, IV18.

- Social perception to infer existence of occluded objects from behavior of others: IV19, TechXplore.

- Cooperative perception to achieve better detection and tracking of occluded objects via connectivity.

2. Mapping and Localization

We consider two aspects of mapping/localization research: a localization-oriented aspect, which includes point cloud map construction and localization based on multi-sensor fusion, and a semantic-oriented aspect, which aims to understand the scene/road and construct HD maps for the prediction/planning modules. Our major focus is to automatically construct semantic HD maps by reconstructing the road geometry from low-cost sensor data and inferring the topology and semantics via road/scene understanding models and rough navigation maps. We also built the UrbanLoco dataset with a full sensor suite to test mapping and localization algorithms in dense urban scenarios such as downtown San Francisco and Hong Kong.

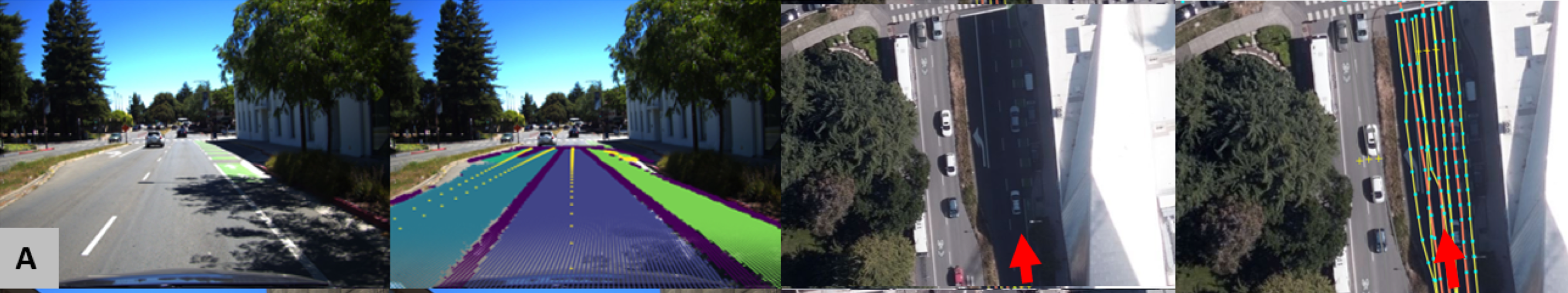

2.1 Automatic HD map construction and scene understanding

- Automatic construction of semantic HD map utilizing rough navigation map: paper to appear.

- Road and scene understanding.

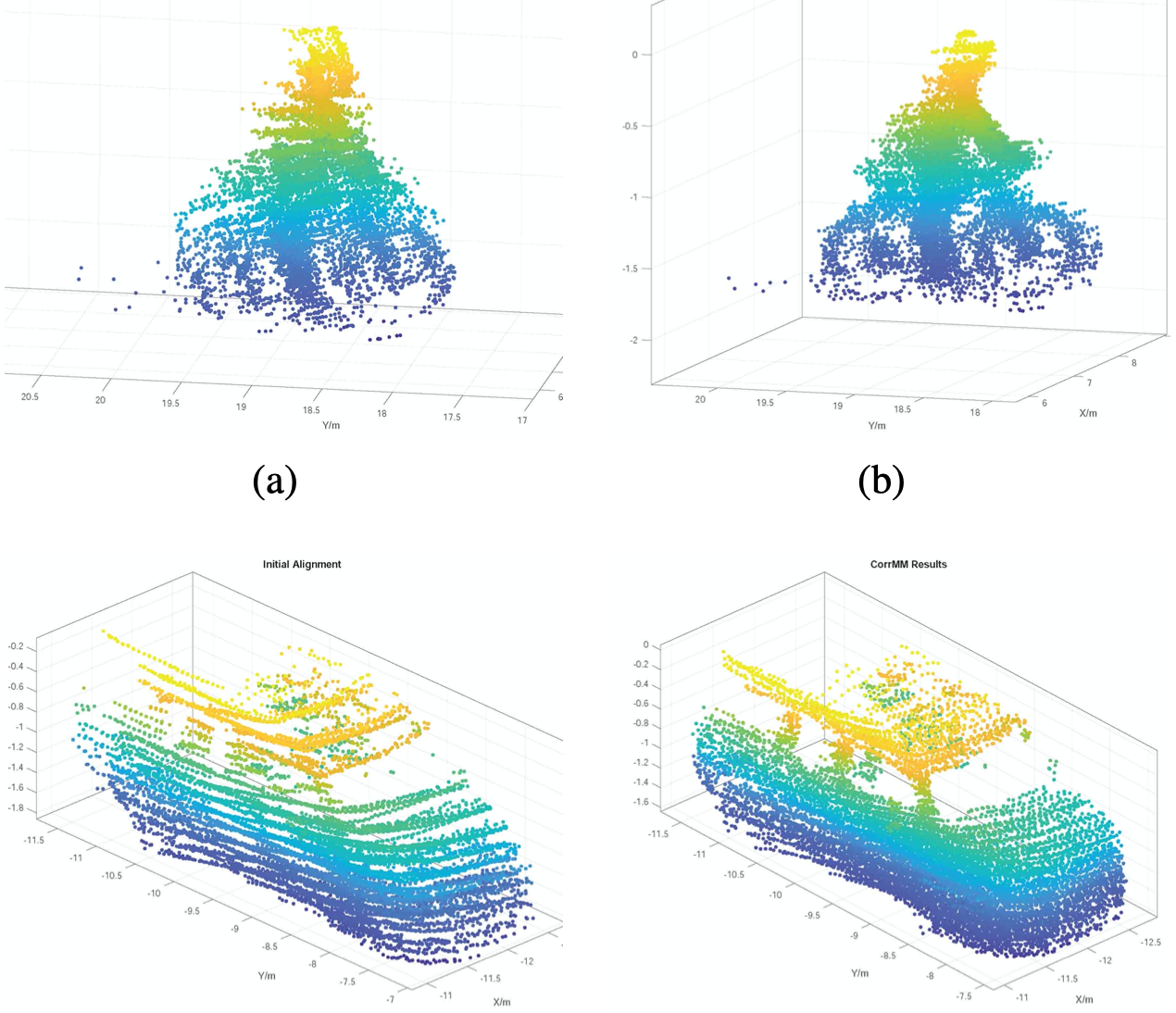

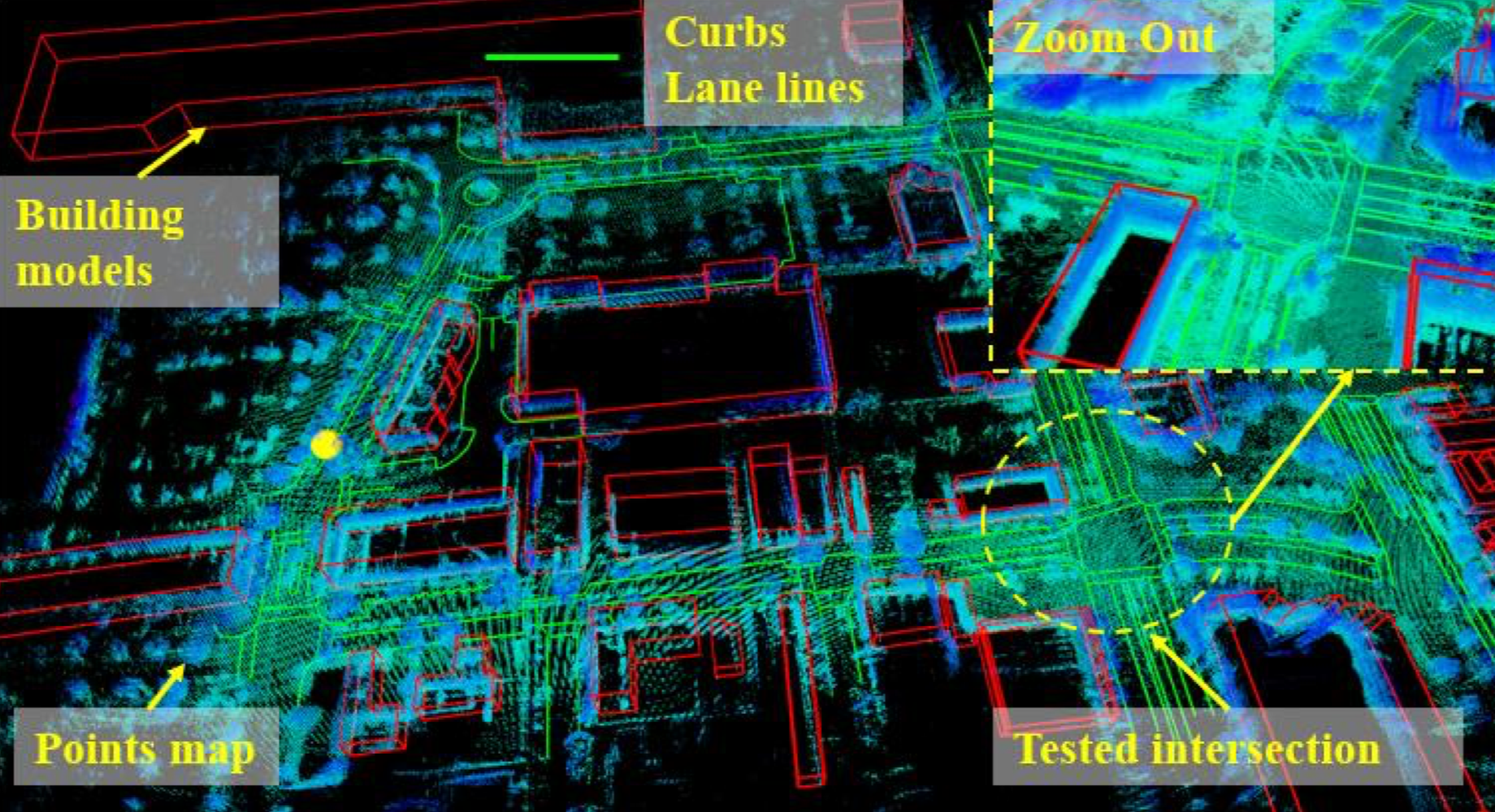

2.2 Localization and point cloud map construction

- Mapping and localization dataset in highly urbanized scenes – UrbanLoco dataset: website, dataset paper (ICRA20), blog, video.

- Uncertainty estimation in LiDAR-based localization: IET Electronics Letters.

- City-scale mapping and localization with object removal.

3. Behavior Data, Simulation and Evaluation

One of the most challenging tasks in autonomous driving is to understand, predict and react to the interactive behavior of surrounding agents in complex scenarios. Supporting research in these fields requires addressing several fundamental aspects: behavior data in highly interactive scenarios with HD maps, as well as the structure, metrics, and generation of reactive behavior and scenarios for simulating and testing prediction/planning algorithms. We constructed the INTERACTION dataset, which contains densely interactive/critical behavior from international locations along with semantic HD maps. It has been widely used and serves as the basis for our behavior-related research, from simulation, generation and extraction to prediction, decision and planning. We also proposed a closed-loop structure and metrics for prediction, and organized related competitions. Research efforts are also devoted to generating critical and natural behavior, as well as scenarios, in simulation.

3.1 Behavior dataset construction

3.2 Closed-loop evaluation of prediction

- Fatality-aware evaluation of prediction: ITSC18, IV18.

- INTERPRET Challenge as a NeurIPS 2020 competition.

- Closed-loop evaluation of prediction: paper to appear.

3.3 Scenario/behavior generation in simulation and test

- Critical and natural behavior generation of agents in simulation: IROS21.

- Scenario generation with genetic algorithms: paper to appear.

- Human-in-loop simulation and test.

4. Behavior Generation, Analysis, Extraction and Representation

To accurately predict interactive behavior, to make human-like, safe and high-quality reactive decisions and plans, or to generate natural behavior in simulation, we need to understand and analyze large amounts of interactive behavior data, and to express, learn and generate human-like reactive behavior based on fundamental theories of human behavior and interaction. We bring in game theory as well as other theories from economics and psychology, such as cumulative prospect theory, to express and model interactive behavior with irrationalities regarding utilities and risks. These theories are combined with machine learning and control methods, such as inverse reinforcement learning, to make the reward and other key parameters learnable. We also propose models based on unsupervised/multi-task learning for extracting interactive behavior and patterns from human driving data.
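To give a feel for how cumulative prospect theory departs from risk-neutral expected value, here is a minimal Python sketch. It is not the group's model. The functional forms and default parameters are the commonly cited Tversky and Kahneman (1992) estimates for losses, the merge/yield costs are hypothetical, and the prospect is restricted to a single nonzero outcome so that no rank-dependent cumulative weighting is needed.

```python
def cpt_value(outcome, alpha=0.88, beta=0.88, loss_aversion=2.25):
    """Subjective value of a gain or loss relative to the reference point 0."""
    if outcome >= 0:
        return outcome ** alpha
    return -loss_aversion * ((-outcome) ** beta)


def cpt_weight(prob, gamma=0.69):
    """Inverse-S probability weighting: small probabilities are overweighted."""
    if prob <= 0.0:
        return 0.0
    if prob >= 1.0:
        return 1.0
    scaled = prob ** gamma
    return scaled / (scaled + (1.0 - prob) ** gamma) ** (1.0 / gamma)


def expected_value(outcome, prob):
    """Risk-neutral evaluation of the same prospect."""
    return prob * outcome


def cpt_prospect(outcome, prob):
    """CPT value of a prospect with one nonzero outcome (otherwise nothing happens)."""
    return cpt_weight(prob) * cpt_value(outcome)


# Hypothetical costs (assumption): an assertive merge risks a large loss with
# small probability, while yielding incurs a small but certain delay.
assertive = (-100.0, 0.01)
yielding = (-2.0, 1.0)

risk_neutral_choice = max([assertive, yielding], key=lambda o: expected_value(*o))
cpt_choice = max([assertive, yielding], key=lambda o: cpt_prospect(*o))
print("risk-neutral:", risk_neutral_choice, "CPT:", cpt_choice)
```

With these toy numbers the risk-neutral evaluation favors the assertive merge, while the CPT evaluation favors yielding, because the rare large loss is both overweighted and amplified by loss aversion.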

4.1 Event extraction and representation learning

- Interactive behavior extraction and unsupervised learning of interaction patterns: RA-Letters/ICRA21.

4.2 Human-like interactive behavior generation and analysis

- Courtesy- and confidence-aware inference and generation for socially-compatible and human-like driving behavior: RA-Letters/ICRA21.

- Human-like behavior generation based on game theoretic strategies: IROS20.

- Expressing irrationality of human behaviors via risk-sensitive inverse reinforcement learning: ITSC19.

- Bounded risk-sensitive Markov games considering irrationality for both policy design and reward learning: AAAI21.

- Multi-agent inverse reinforcement learning by inferring latent intelligence levels of game: IROS21.

4.3 Reward learning

- Efficient sampling-based maximum entropy inverse reinforcement learning: RA-Letters/IROS20.

- Probabilistic reward learning and online inference for diverse driving behavior: IROS20.

- Learning generic and suitable cost functions for driving: ICRA20.
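As a generic illustration of reward (cost) learning with maximum entropy inverse reinforcement learning, the sketch below approximates the partition function over a small set of sampled trajectories and follows the gradient "demonstrated features minus expected features". It is a textbook-style sketch, not the method of the papers listed above. The two features, the sampled trajectories and the function names are hypothetical.

```python
import math


def trajectory_probs(theta, sample_features):
    """P(trajectory) proportional to exp(-cost), normalized over the sampled set."""
    costs = [sum(t * f for t, f in zip(theta, feats)) for feats in sample_features]
    lowest = min(costs)  # shift costs for numerical stability
    weights = [math.exp(-(c - lowest)) for c in costs]
    total = sum(weights)
    return [w / total for w in weights]


def expected_features(theta, sample_features):
    """Feature expectation under the current trajectory distribution."""
    probs = trajectory_probs(theta, sample_features)
    return [sum(p * feats[k] for p, feats in zip(probs, sample_features))
            for k in range(len(theta))]


def fit_cost_weights(demo_features, sample_features, lr=0.5, iters=2000):
    """Gradient descent on the negative log-likelihood of the demonstration."""
    theta = [0.0] * len(demo_features)
    for _ in range(iters):
        model = expected_features(theta, sample_features)
        # Gradient of the negative log-likelihood: demo minus model features.
        theta = [t - lr * (d - m) for t, d, m in zip(theta, demo_features, model)]
    return theta


# Hypothetical features per trajectory (assumption): (jerk, speed deviation).
samples = [(0.2, 1.0), (0.6, 0.6), (1.0, 0.2)]
demo = (0.3, 0.9)  # the demonstration prefers low jerk

theta = fit_cost_weights(demo, samples)
matched = expected_features(theta, samples)
print("cost weights:", theta, "matched features:", matched)
```

After fitting, the expected features under the learned cost match the demonstrated ones, and jerk receives the larger cost weight. In this toy set only the relative weights are identifiable.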

5. Behavior Prediction

Interactive behavior prediction is a challenging prerequisite for decision and planning in highly interactive scenarios. We proposed various models based on graph neural networks, conditional variational auto-encoders and other deep generative models to enable interactive prediction for multiple, heterogeneous agents (vehicles, pedestrians, etc.). We also proposed planning-based prediction methods based on inverse reinforcement learning, which inherently predict the reactions of others given the future plans of the ego vehicle. Furthermore, we dive into a generic representation of the semantics of all static and dynamic elements in a variety of driving scenarios, and encode this representation into a semantic graph so that zero-shot scenario-transferable prediction is enabled.

5.1 Representation and transferability for prediction

- Semantic representation and prediction: IV18 (Best Student Paper).

- Generic scene/map/motion representation encoded to semantic graph enabling zero-shot scenario-transferable prediction: arxiv.

5.2 Interactive prediction

- Prediction based on graph neural network: NeurIPS20, arxiv.

- Conditional variational auto-encoder (ITSC18) and its interpretability (IV19, TechXplore).

- Deep generative models (IV19, ICRA19-1, ICRA19-2, IROS19) incorporating vehicle kinematics (IV19).

- Probabilistic graphical model based on hidden Markov model (ITSC18) and dynamic Bayesian network (ITSC19).
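To illustrate the hidden Markov model idea in its simplest form, the following sketch runs the standard forward (filtering) recursion to track the probability that another vehicle intends to change lanes. It is a generic example rather than the model from the cited papers. The two intent states, the discretized observations and all probabilities are hypothetical.

```python
def hmm_filter(prior, transition, emission, observations):
    """Forward recursion: predict with the transition model, correct with the emission model."""
    belief = dict(prior)
    for obs in observations:
        predicted = {
            state: sum(belief[prev] * transition[prev][state] for prev in belief)
            for state in belief
        }
        unnormalized = {s: predicted[s] * emission[s][obs] for s in predicted}
        total = sum(unnormalized.values())
        belief = {s: v / total for s, v in unnormalized.items()}
    return belief


# Hypothetical intent model (assumption): values chosen only for illustration.
prior = {"keep_lane": 0.9, "change_lane": 0.1}
transition = {
    "keep_lane": {"keep_lane": 0.95, "change_lane": 0.05},
    "change_lane": {"keep_lane": 0.10, "change_lane": 0.90},
}
emission = {
    "keep_lane": {"centered": 0.8, "drifting": 0.2},
    "change_lane": {"centered": 0.3, "drifting": 0.7},
}

belief = hmm_filter(prior, transition, emission, ["drifting"] * 3)
print("intent belief after three drifting observations:", belief)
```

Three consecutive "drifting" observations are enough to move the belief from mostly "keep_lane" to mostly "change_lane", which is the kind of probabilistic intent estimate a downstream planner can consume.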

5.3 Planning-based reaction prediction

- Hierarchical inverse reinforcement learning predicting reactions given ego plan: ITSC18.

- A better prediction model by online combination of deep learning with inverse reinforcement learning: ITSC19.

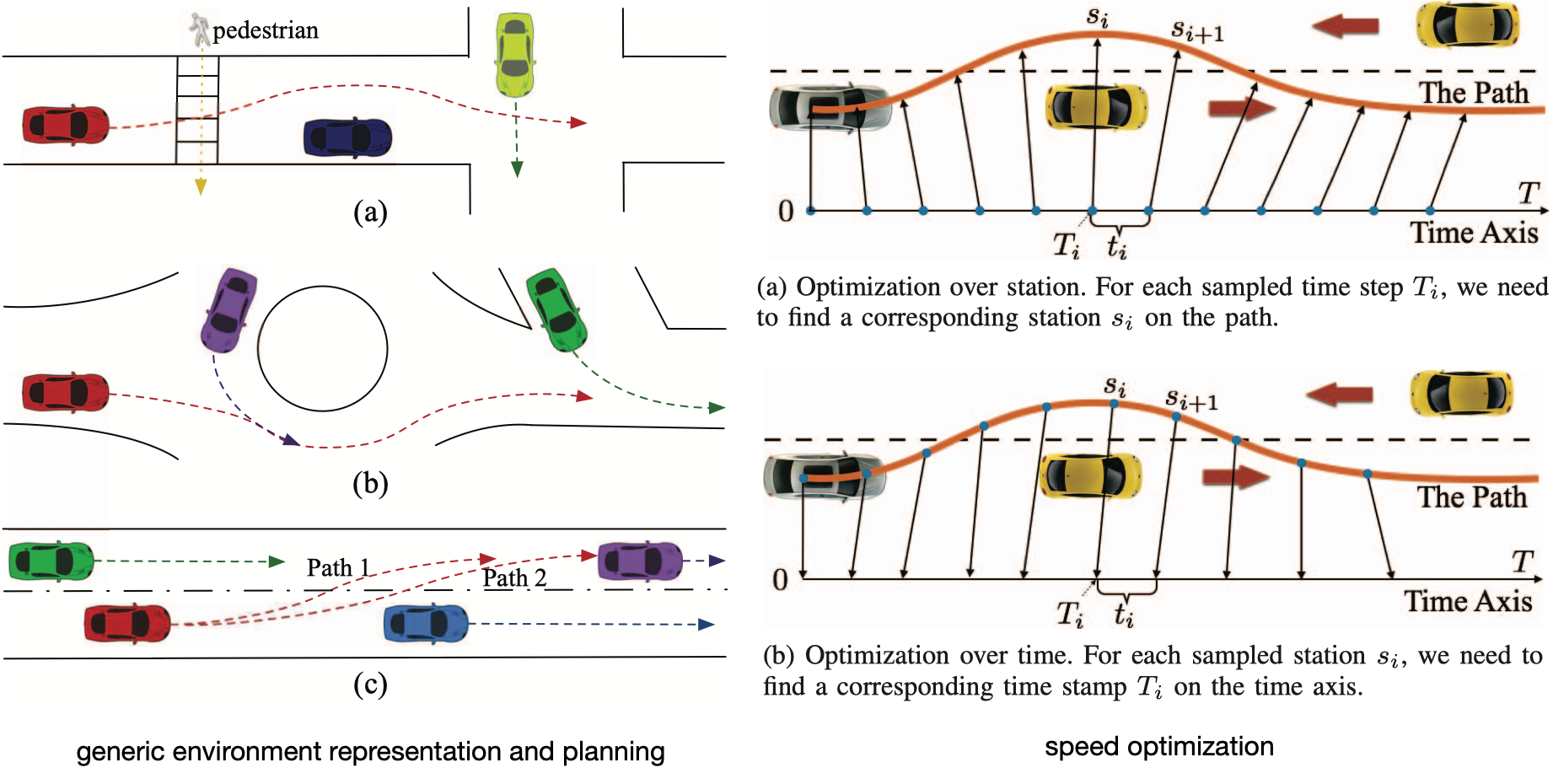

6. Decision and Behavioral Planning

Autonomous vehicles need to make decisions and plan behavior interactively, in a way that is safe and human-like with desirable driving quality. One of our focuses is to design the structure of interactive decision and planning with probabilistic prediction, based on POMDP, reachability and optimization/sample-based planners. We propose learning-based methods for decision-making under uncertainty with hierarchical or modularized structures. We also address the combination of machine learning (imitation/reinforcement learning) and model-based methods (model predictive control/optimal control) to utilize the advantages of both.

6.1 Interactive decision and planning with probabilistic prediction

- Integrated decision and planning to achieve non-conservatively defensive strategy: ITSC16, IV18.

- Prediction-based reachability for safe decision and planning: ICRA21.

- Interactive decision and planning under uncertainty with POMDP (DSCC20) and optimization-based negotiation (IV18, ACC19).

- Robustly safe automated driving system: ACC16, SAE17.

6.2 Safe and human-like decision and planning incorporating learning and model-based methods

- Safe and feasible imitation learning: IECON17, DSCC18, IROS19.

- Hierarchical framework with safe planning and reinforcement learning decision-making: ICRA21.

- Imitating social behavior with courtesy via inverse optimal control: IROS18.

6.3 Learning-based decision under uncertainty

- Hierarchical reinforcement learning (IV18) and modularized network for driving policy (IV19).

- Deep reinforcement learning: IROS19 (Best Paper Finalist), ITSC19, Trans-ITS.

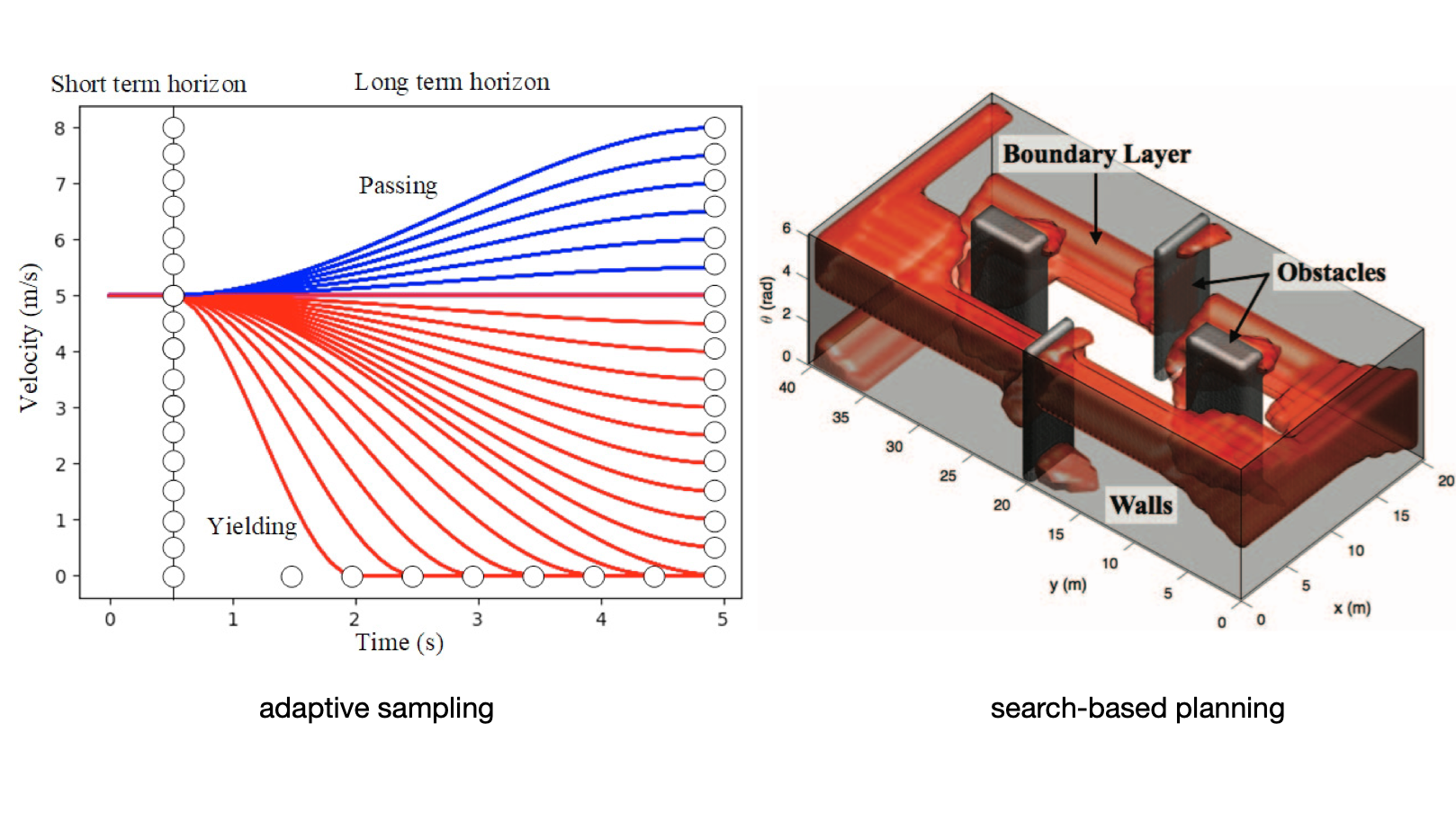

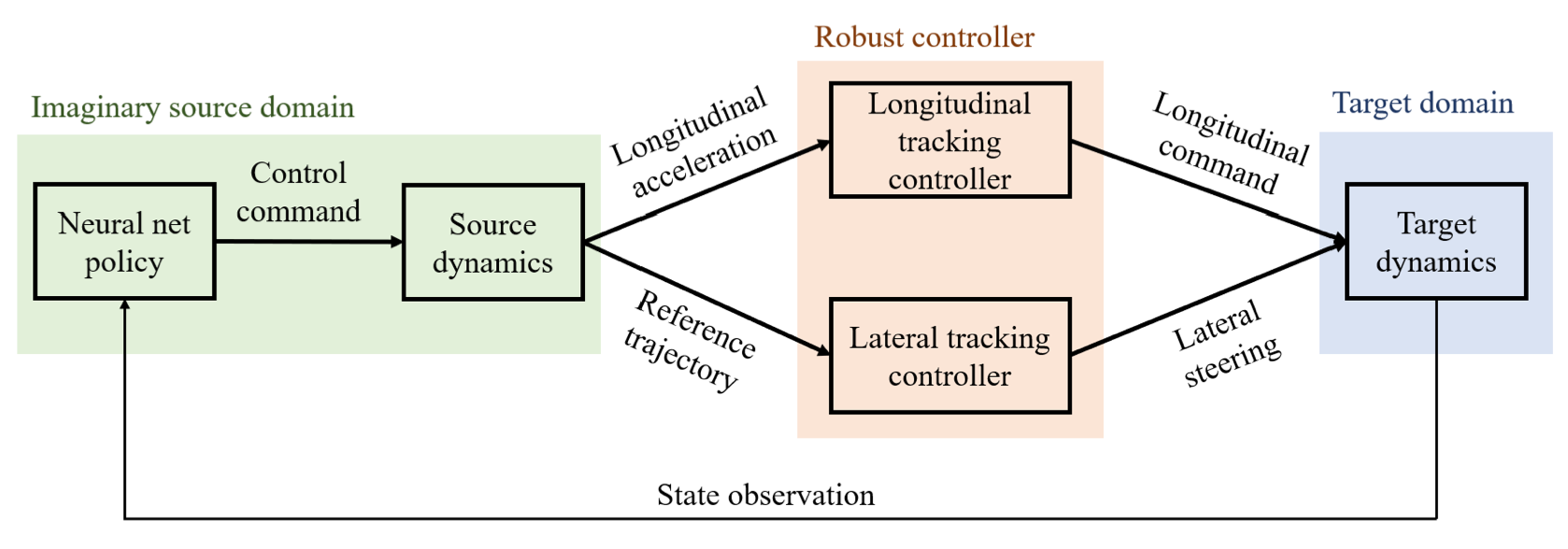

7. Motion Planning and Control

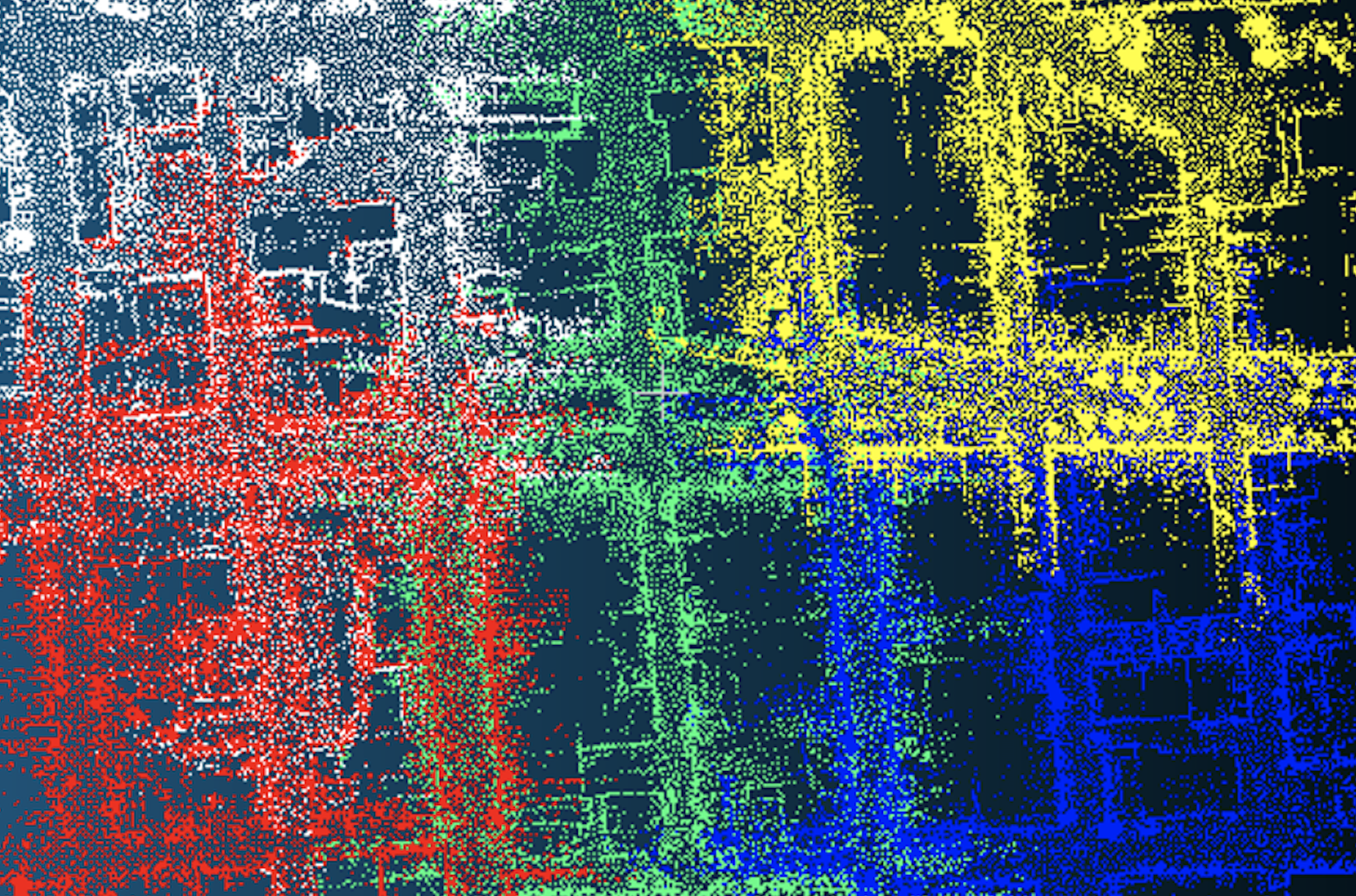

Safe and high-quality trajectory planning is crucial for autonomy. We proposed various approaches based on optimal control, optimization, sampling and graph search to enable such capabilities. We also proposed a generic scene representation with a novel partition of the spatiotemporal domain for planning, to cover a wide variety of scenarios. In addition to conventional vehicle dynamics and control research, we combine it with learning-based methods to enable policy transfer across different vehicle platforms and environments. Moreover, autonomous racing has become a major application of our planning and control research due to its sophisticated vehicle dynamics and complex interactions, and we build autonomous racers that beat human expert drivers. Autonomous delivery, with its significantly varying environments, is another focus of our dynamics and control research.

7.1 Trajectory planning

- Generic scene representation and spatiotemporal domain partition: IV17.

- Optimal-control-based method – constrained iterative LQR: Trans-IV, ITSC17, DSCC20, IROS21.

- Temporal optimization for speed planning (IV17) and optimization-based planning (ACC18).

- Planning based on adaptive sampling (IFAC20) and graph search (IV17).
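For readers unfamiliar with LQR-based planning, the sketch below shows the backward Riccati recursion for a plain, unconstrained, scalar linear-quadratic regulator. Iterative LQR repeats a pass of this kind around a linearized trajectory, and the constrained variant cited above additionally handles constraints, which this sketch does not attempt. The speed-error model, the cost weights and the function names are assumptions chosen for illustration.

```python
def lqr_gains(a, b, q, r, q_final, horizon):
    """Backward Riccati recursion for x[k+1] = a*x[k] + b*u[k] (scalar case)."""
    p = q_final  # cost-to-go weight at the final step
    gains = []
    for _ in range(horizon):
        k = (a * b * p) / (r + b * b * p)  # feedback gain for u = -k*x
        p = q + a * a * p - a * b * p * k  # Riccati update of the cost-to-go
        gains.append(k)
    gains.reverse()  # gains[0] applies at the first time step
    return gains


def rollout(x0, a, b, gains):
    """Simulate the closed loop under the time-varying feedback gains."""
    xs = [x0]
    for k in gains:
        u = -k * xs[-1]
        xs.append(a * xs[-1] + b * u)
    return xs


# Hypothetical speed-tracking problem (assumption): the state is the speed
# error in m/s, the control is acceleration, and DT is the step in seconds.
DT = 0.1
gains = lqr_gains(a=1.0, b=DT, q=1.0, r=0.1, q_final=1.0, horizon=50)
errors = rollout(x0=5.0, a=1.0, b=DT, gains=gains)
print("final speed error:", errors[-1])
```

The ratio of `q` to `r` trades tracking accuracy against control effort: a larger `r` yields smaller gains and a gentler, slower correction.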

7.2 Vehicle dynamics and control

- Autonomous racing: planning and control tackling sophisticated vehicle dynamics, beating human expert drivers: paper to appear.

Activities

Workshops & competitions in flagship conferences

Machine learning and computer vision community

- NeurIPS 2020 Competition (December 2020) We organized a behavior prediction challenge in highly interactive driving scenarios, i.e., the INTERPRET Challenge, as a regular competition at NeurIPS 2020. The challenge uses the INTERACTION Dataset.

- CVPR 2020 Workshop (June 2020) The results of the first round of the INTERPRET Challenge will also be presented at the CVPR 2020 Workshop organized by Waymo: Scalability in Autonomous Driving.

- ICML 2020 Workshop (July 2020) Wei Zhan was invited as a speaker to present the INTERACTION Dataset and INTERPRET Challenge in the ICML 2020 Workshop on AI for Autonomous Driving.

Robotics community

- RSS 2020 Workshop (June 2020): Interaction and Decision-Making in Autonomous-Driving, organized by Rowan McAllister, Liting Sun, Igor Gilitschenski, and Daniela Rus.

- IROS 2019 Workshop (Macao, November 2019): Benchmark and Dataset for Probabilistic Prediction of Interactive Human Behavior, organized by Wei Zhan, Liting Sun and Masayoshi Tomizuka.

Intelligent transportation/vehicle community

- IV 2020 Joint Workshops (Las Vegas, postponed to October 2020): From Benchmarking Behavior Prediction to Socially Compatible Behavior Generation in Autonomous Driving, organized by Wei Zhan, Liting Sun, Maximilian Naumann, Jiachen Li and Masayoshi Tomizuka.

- ITSC 2020 Workshop (September 2020): Probabilistic Prediction and Comprehensible Motion Planning for Automated Vehicles – Approaches and Benchmarking, organized by Maximilian Naumann, Martin Lauer, Liting Sun, Wei Zhan, Masayoshi Tomizuka, Arnaud de La Fortelle, Christoph Stiller.

- IV 2019 Workshop (Paris, June 2019): Prediction and Decision Making for Socially Interactive Autonomous Driving, organized by Wei Zhan, Jiachen Li, Liting Sun, Yeping Hu and Masayoshi Tomizuka.

Joining Our Group

Please send an email to Professor Masayoshi Tomizuka (tomizuka@berkeley.edu) and Dr. Wei Zhan (wzhan@berkeley.edu) if you are interested in our Research Topics and joining our group.

- We welcome Berkeley students to work with us directly, and students from outside Berkeley to visit us. We also accept virtual visits, so that those who have difficulty visiting in person can work with us remotely. Please note that an experience will not be recognized without a formal interview and approval by the faculty and postdocs.

- Prospective Ph.D. students should apply to the Mechanical Engineering Department of UC Berkeley by December 1st and send an email describing their strengths and interests.

Please make sure that the following aspects are well covered in your application email.

- Indicate in the email your 1) primary goal for the research experience and particular interests; 2) start and end dates for working with us; 3) unique strengths in research experience/publications and/or skills and knowledge; 4) long-term/career goals.

- Attach a CV including your 1) home university, major, GPA and ranking; 2) research/working experiences; 3) publications/patents (if any); 4) skill set on coding/software/hardware and corresponding proficiency; 5) knowledge set on methods/algorithms.

- Attach a brief introduction (within 5 pages of slides) showing the core methods/algorithms and main results and demos of your previous research or working experiences. Links to cloud storage are welcome for large files.

- Attach all publications (including submitted papers) or well-formatted final project reports, if any. Links to cloud storage or online publications are welcome.

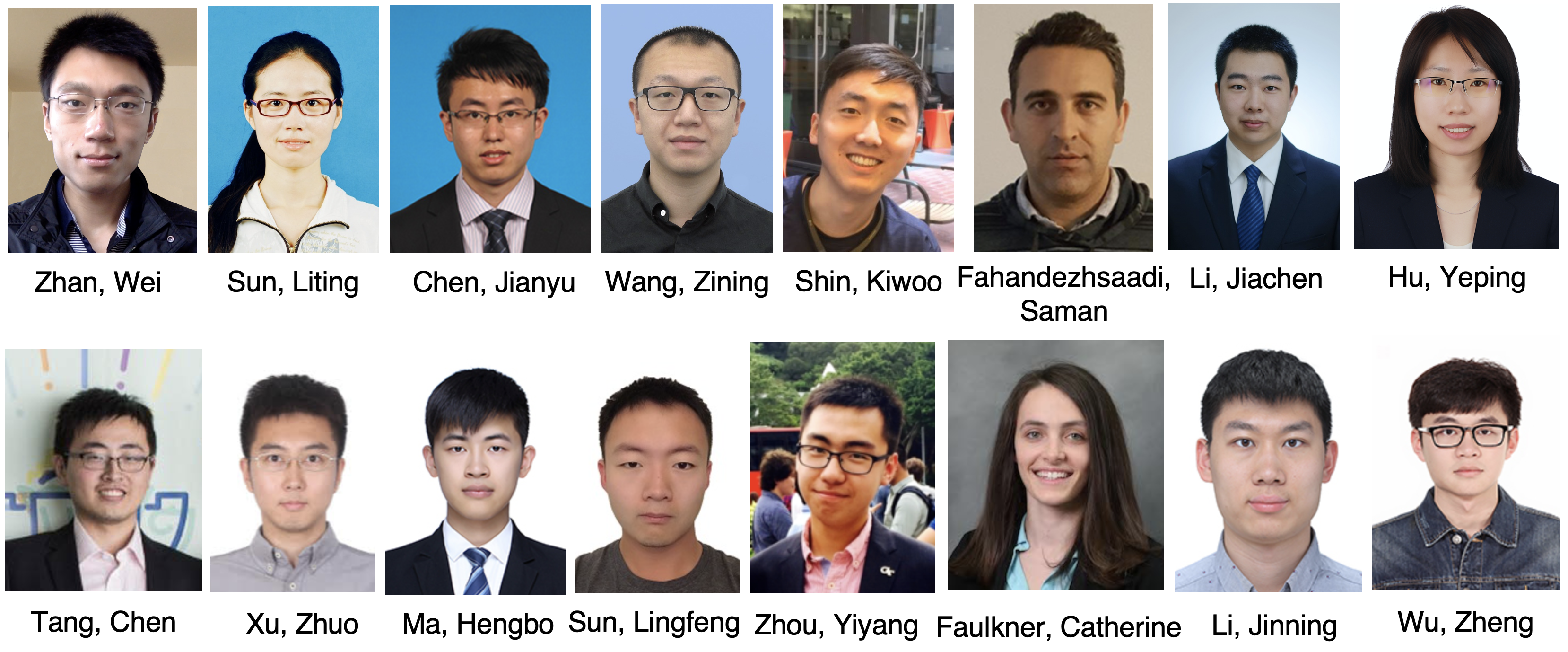

Members and Contact

The group lead is Dr. Wei Zhan. The research activities are coordinated by 2 Postdocs (Dr. Wei Zhan and Dr. Liting Sun) and conducted by 14 Ph.D. students (following headshots) with over 10 visiting researchers.

Please contact Dr. Wei Zhan (wzhan@berkeley.edu) and Professor Masayoshi Tomizuka (tomizuka@berkeley.edu) for more information if you are interested in the topics above by

- working with us (join/visit the group or collaborate) from academia, or

- research collaboration from industry.